import re

from typing import Any, Dict, List, Tuple

import urllib.request

from importlib.metadata import version

import tiktoken

import torch

from torch.utils.data import Dataset, DataLoaderDataloader Creation

This notebook explores dataset/dataloader creation techniques based on Sebastian Raschka’s book (Chapter 2), implementing data sampling, batching, and other techniques.

Dataset

Borrowed from Manning’s Live Books

Acknowledgment

All concepts, architectures, and implementation approaches are credited to Sebastian Raschka’s work. This repository serves as my personal implementation and notes while working through the book’s content.

Resources

# Verify library versions.

print("tiktoken version:", version("tiktoken")) # expected: 0.7.0tiktoken version: 0.7.0Fetch sample data

# Download sample data to a text file.

local_filename = "data/the_verdict.txt"

url = (

"https://raw.githubusercontent.com/rasbt/LLMs-from-scratch/main/ch02/01_main-chapter-code/"

"the-verdict.txt"

)

urllib.request.urlretrieve(url, local_filename)

# Read the text file into a string.

with open(local_filename, "r", encoding="utf-8") as f:

raw_text = f.read()

print("Total number of character:", len(raw_text))

# Encode the text.

tokenizer = tiktoken.get_encoding("gpt2")

enc_text = tokenizer.encode(raw_text)

print("Total number of tokens:", len(enc_text))Total number of character: 20479

Total number of tokens: 5145Sample some text

# Skip the first 50 tokens (for a slightly more interesting example).

enc_sample = enc_text[50:]

# Show tokens in a context window of size 4.

# NOTE: The context size determines how many tokens are included in the input.

context_size = 4

x = enc_sample[:context_size]

y = enc_sample[1 : context_size + 1]

print(f"x: {x}")

print(f"y: {y}")x: [290, 4920, 2241, 287]

y: [4920, 2241, 287, 257]# Show input-output pairs for a given context size.

show_text = True

for i in range(1, context_size + 1):

context = enc_sample[:i]

desired = enc_sample[i]

if show_text:

print(tokenizer.decode(context), "---->", tokenizer.decode([desired]))

else:

print(context, "---->", desired) and ----> established

and established ----> himself

and established himself ----> in

and established himself in ----> aDataset

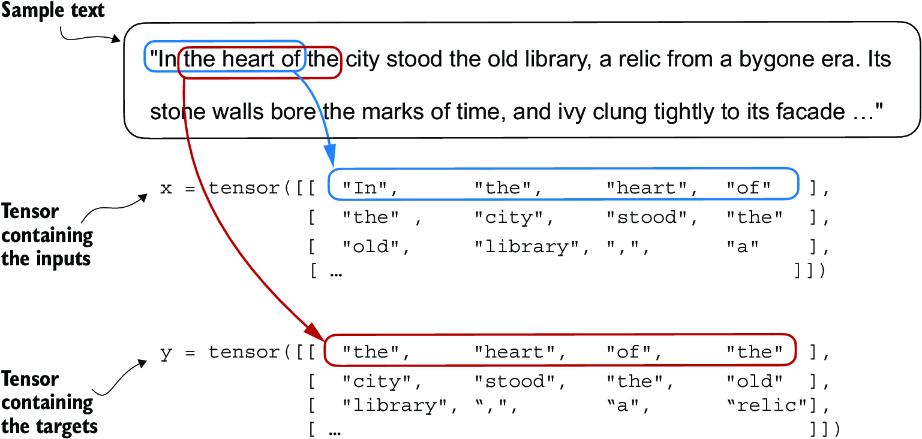

Datasets are created using a sliding window approach as illustrated below.

Note that each sample (input tensor x and target tensor y) contains multiple training samples. For example the first row:

- x[0][0] = [“In”, “the”, “heart”, “of”]

- y[0][0] = [“the”, “heart”, “of”, “the”]

would map to multiple training samples x -> y as follows:

- “In” –> “the”

- “In the” –> “heart”

- “In the heart” –> “of”

- “In the heart of” –> “the”

class GPTDatasetV1(Dataset):

def __init__(self, text: str, tokenizer: Any, max_length: int, stride: int):

# Initialize inputs / outputs.

self.input_ids = []

self.target_ids = []

# Argument validation.

assert stride > 0, "Stride must be greater than 0."

assert max_length > 0, "max_length must be greater than 0."

assert len(text) > 0, "text must be a non-empty string."

assert stride <= max_length, (

"Stride cannot be larger than max_length, otherwise some input text will "

"be skipped during tokenization."

)

# Tokenize the entire input text.

token_ids = tokenizer.encode(text)

# Use a sliding window approach to create input/output pairs.

# NOTE: The maximum sequence length produced is determined by 'max_length'.

# NOTE: If stride < max_length, the window (chunks) will overlap.

for i in range(0, len(token_ids) - max_length, stride):

input_chunk = token_ids[i : i + max_length]

target_chunk = token_ids[i + 1 : i + max_length + 1]

self.input_ids.append(torch.tensor(input_chunk))

self.target_ids.append(torch.tensor(target_chunk))

def __len__(self) -> int:

"""Return the total length of the dataset.

NOTE: This is a required method for PyTorch Datasets.

"""

return len(self.input_ids)

def __getitem__(self, idx: int) -> Tuple[torch.tensor, torch.tensor]:

"""Access an item at a given index.

NOTE: This is a required method for PyTorch Datasets.

"""

return self.input_ids[idx], self.target_ids[idx]

dataset = GPTDatasetV1(text=raw_text, tokenizer=tokenizer, max_length=10, stride=5)

dataset[0](tensor([ 40, 367, 2885, 1464, 1807, 3619, 402, 271, 10899, 2138]),

tensor([ 367, 2885, 1464, 1807, 3619, 402, 271, 10899, 2138, 257]))Dataloader

Before being able to turn tokens into embeddings, we need to implement an efficient dataloader.

A dataloader provides the following functionality:

- Efficient iteration over the dataset

- Batching and shuffling functionality

Internally, the dataloader will operate on tensors that contain tokens (and not the raw text). A sample returned from the dataloader will contain an input tensor (a sequence of tokens) and a target tensor (the next word in the sequence).

def create_dataloader_v1(

text: str,

batch_size: int = 4,

max_length: int = 256,

stride: int = 128,

shuffle: bool = True,

drop_last: bool = True,

num_workers: bool = 0,

):

"""Create a dataloader from a given text.

Args:

text: The input data to create a dataset from.

batch_size: The number of samples to group into a batch.

max_length: The maximum number of tokens to store in each sample.

stride: The stride determines how far we slide the sliding window at each sampling step. A

stride of 1 slides the window by one token. A stride < max_length results in

overlapping input samples while a stride > max_length leads to input tokens being

skipped (i.e. not be contained in any input sample). A stride == max_length utilizes

the data fully without any overlap or skipping (overlapping samples can lead to

increased overfitting during model training).

shuffle:

drop_last: If set, the last batch of data is dropped if it is shorter than the specified

batch size (to prevent loss spikes during training).

num_workers: The number of CPU processes to use for data preprocessing.

"""

# Instantiate a tokenizer.

tokenizer = tiktoken.get_encoding("gpt2")

# Create the dataset from the input text.

dataset = GPTDatasetV1(

text=text, tokenizer=tokenizer, max_length=max_length, stride=stride

)

dataloader = DataLoader(

dataset,

batch_size=batch_size,

shuffle=shuffle,

drop_last=drop_last,

num_workers=num_workers,

)

return dataloader# Test dataloader creation.

with open(local_filename, "r", encoding="utf-8") as f:

raw_text = f.read()

dataloader = create_dataloader_v1(

text=raw_text, batch_size=1, max_length=4, stride=1, shuffle=False

)

data_iter = iter(dataloader)

first_batch = next(data_iter)

print(first_batch)[tensor([[ 40, 367, 2885, 1464]]), tensor([[ 367, 2885, 1464, 1807]])]Token embeddings

Converting tokens to token embeddings requires the initialization of embedding weights. Most commonly, these embedding weights are just initialized with random values. These weights are optimized during LLM training.

A token embedding layer can be thought of as a trainable lookup table that maps a vocabulary of size N to vectors of embedding dimension T. Each token that is part of the vocabulary is mapped to a vector of dimension T.

For reference, the vocabulary of the BPE tokenizer is of size 50,257. The embedding dimension of GPT-3 is of size 12,288.

Note that embedding layers can be thought of as a more efficient implementation of one-hot encoding followed by matrix multiplication in a fully connected layer (see supplementary code on “Understanding the Difference Between Embedding Layers and Linear Layers”)

Embedding layers perform a lookup operation (as shown above), retrieving the embedding vector corresponding to the token ID from the embedding layer’s weight matrix. For instance, the embedding vector of the token ID 5 is the sixth row of the embedding layer weight matrix (it is the sixth instead of the fifth row because Python starts counting at 0).

# Sample embedding.

vocab_size = 6

output_dim = 3

# The embedding layer is of shape 6 x 3 which maps a vocabulary of size 6 to an embedding space of

# dimension 3. The weight matrix, therefore, has six rows and three columns which means there is one

# row in the matrix for each token in the vocabulary.

torch.manual_seed(123)

embedding_layer = torch.nn.Embedding(vocab_size, output_dim)

print(embedding_layer.weight)

print(embedding_layer.weight.shape)

# Apply the embedding layer to a single token ID.

# NOTE: This is essentially a lookup operation that retrieves a given row from the embedding

# layer's weight matrix.

print(embedding_layer(torch.tensor([3])))

# Apply the embedding layer to multiple token IDs in batch.

print(embedding_layer(torch.tensor([2, 3, 5, 1])))Parameter containing:

tensor([[ 0.3374, -0.1778, -0.1690],

[ 0.9178, 1.5810, 1.3010],

[ 1.2753, -0.2010, -0.1606],

[-0.4015, 0.9666, -1.1481],

[-1.1589, 0.3255, -0.6315],

[-2.8400, -0.7849, -1.4096]], requires_grad=True)

torch.Size([6, 3])

tensor([[-0.4015, 0.9666, -1.1481]], grad_fn=<EmbeddingBackward0>)

tensor([[ 1.2753, -0.2010, -0.1606],

[-0.4015, 0.9666, -1.1481],

[-2.8400, -0.7849, -1.4096],

[ 0.9178, 1.5810, 1.3010]], grad_fn=<EmbeddingBackward0>)Position embeddings

The embedding layer has no notion of position or order of tokens within a sequence. The same token ID always gets mapped to the same vector representation regardless of position in the sequence. That shortcoming is commonly addressed via position embeddings that add are added to the token embedding.

The embedding layer converts a token ID into the same vector representation regardless of where it is located in the input sequence. For example, the token ID 5, whether it’s in the first or fourth position in the token ID input vector, will result in the same embedding vector.

Position embeddings fall into one of two categories:

- relative position embeddings: these encode the relative distance between tokens (or “how far apart” tokens are). The advantage is that models can generalize better to sequences of varying lengths.

- absolute position embeddings: associated with a specific position in the sequence. For each position in the input sequence, a unique embedding is added to the token embedding.

In the above example of absolute positional embeddings are added to the token embedding vector to create the input embeddings for an LLM. The positional vectors have the same dimension as the original token embeddings.

For reference, OpenAI’s GPT uses absolute positional encodings that are optimized during training rather than defined in a fixed manner.

# A full example of token embedding + absolute positional embedding.

# Create an embedding layer.

# NOTE: The vocab size is that of the BPE tokenizer.

vocab_size = 50257

output_dim = 256

token_embedding_layer = torch.nn.Embedding(vocab_size, output_dim)

# Create a dataloader.

max_length = 4

dataloader = create_dataloader_v1(

raw_text, batch_size=8, max_length=max_length, stride=max_length, shuffle=False

)

data_iter = iter(dataloader)

inputs, targets = next(data_iter)

print(f"Token IDs: {inputs}")

print(f"Inputs shape: {inputs.shape}")

# Embed inputs.

# NOTE: Each input token is mapped to an embedding vector of dimension 256.

token_embeddings = token_embedding_layer(inputs)

print(f"Embedding shape: {token_embeddings.shape}")

# Create an absolute position embedding layer.

# NOTE: The embedding layer has shape 4 x 256, i.e. the same embeddings will be added to each

# batch of data.

# NOTE: The input to the pos_embeddings is usually a placeholder vector torch.arange(context_length),

# which contains a sequence of numbers 0, 1, ..., up to the maximum input length –1. The

# context_length is a variable that represents the supported input size of the LLM.

context_length = max_length

pos_embedding_layer = torch.nn.Embedding(context_length, output_dim)

pos_embeddings = pos_embedding_layer(torch.arange(context_length))

print(f"Position embedding layer shape: {pos_embeddings.shape}")

# Add position embeddings to token embeddings.

input_embeddings = token_embeddings + pos_embeddings

print(f"Input embeddings shape: {input_embeddings.shape}")Token IDs: tensor([[ 40, 367, 2885, 1464],

[ 1807, 3619, 402, 271],

[10899, 2138, 257, 7026],

[15632, 438, 2016, 257],

[ 922, 5891, 1576, 438],

[ 568, 340, 373, 645],

[ 1049, 5975, 284, 502],

[ 284, 3285, 326, 11]])

Inputs shape: torch.Size([8, 4])

Embedding shape: torch.Size([8, 4, 256])

Position embedding layer shape: torch.Size([4, 256])

Input embeddings shape: torch.Size([8, 4, 256])Example of a full input embedding pipeline

As part of the input processing pipeline, input text is first broken up into individual tokens. These tokens are then converted into token IDs using a vocabulary. The token IDs are converted into embedding vectors to which positional embeddings of a similar size are added, resulting in input embeddings that are used as input for the main LLM layers.